This post has been a long time coming. I’ll start by painting the context to explain the challenge:

- Big corporate IT system replacement program

- Involves a new architectural paradigm and re-engineered business processes

- More than 200 full-time resources on a multi-year development and roll-out cycle

- SDLC aspects use scaled-up Scrum (more than 20 Scrum teams)

- Here’s the kicker: due to particular constraints around the architecture and revised business processes, we needed to deploy a critical mass of new architecture and business processes together in order for the solution to be internally cohesive. In other words, as much as we hated to go this route from a risk point of view, the first push had to be a big-bang. We did take smaller atomic subsets live earlier, but there was no getting around the big-bang.

Coming into the start of the year, I volunteered to handle the commissioning phase planning and execution coordination. This included the planning for the build-out of the Production host environment that would host the solution as well as the system deployment coordination leading up to the go-live.

Challenge: Our Agile tooling was inadequate for the commissioning phase

In a purer agile model we would be taking small increments of solution delivery live relatively frequently, something like the picture below. Scrum demands that you deploy all the way to operational usage at the end of each Sprint. That’s relatively easy to do at a small tech start-up and more complicated at a big corporate where there are various degrees of role separation between the developers and the deployers. Yes, it is an anti-pattern and, all going well, will eventually be cured by a fully automated deployment pipeline (a subject for another post). For now I’ve drawn it as a three-Sprint batch that is buffered as a release.

In truth, even with the three-Sprint buffer, we would have been happy if this were actually the case. As I mentioned in the introduction, however, we were forced to take a large chunk live just for the system to be functional. The real situation looked like this (ouch!):

The Scrum teams in the SDLC cycle had been continuously deploying to the integration testing environment (“Dev-Test”). The solution then languished here for an extended period of time because it would not be functionally cohesive until partnered with additional business processes to create an atomic whole in the new business paradigm.

With such a big piece of tech needing to be commissioned and a limited deployment window (we still have over a hundred thousand customers in the pilot country so the allowed downtime was a window of a few hours), Sprint planning and post-it notes were not going to suffice as the mechanism to pull off this execution. We would not be able to transparently see the constraints: the team was too big and the tech too complicated for people to hold the dependencies in their heads.

Step 1: Create an execution model using predictive planning techniques (enter: Gantt)

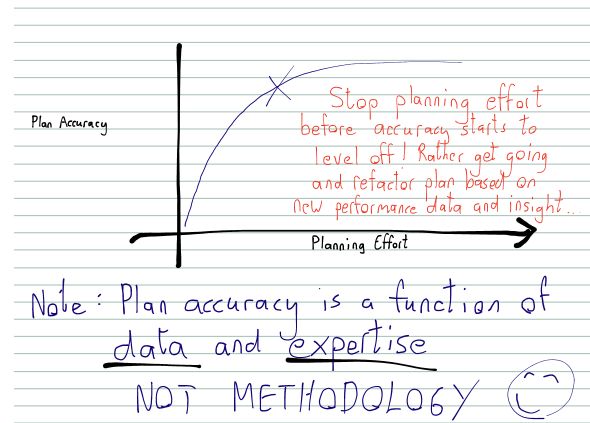

Unlike the SDLC process which is creative and iterative, we needed the deployments to be utterly predictable: the business risk was high and the short deployment window was going to be unforgiving to execution glitches.

We needed to appropriately model the data migration and solution deployment sequence with full transparency of all dependencies. In order to optimize the execution time, we also needed transparency of the critical path (spending time tuning anything other than the critical path would be a waste!).

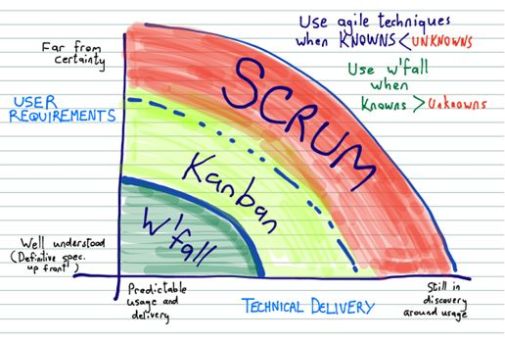

Personal disclaimer: I don’t have any default preference between project management methodologies. Like anything in engineering: choose the right tool for the job and if you understand many techniques then you can leverage different approaches to solve the problem at hand 🙂

…So after a long break I blew the dust off MS-Project and got stuck in, working closely with all the propeller-heads to create an execution model that satisfied the various constraints that became apparent.

Once we could see the critical path we were able to check that the allowed deployment window was indeed feasible and find ways of tuning the execution from a time point of view. The final sequence including once-off data migration activities involved over a hundred atomic tasks that had to be executed across 30 or so people in a few hours (and yes, that’s after automation…).
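
To make the feasibility check concrete, here is a minimal sketch of the critical-path calculation that a Gantt tool performs behind the scenes. It is not the MS-Project model itself: the task names, durations and the 180-minute window are made up for illustration, and the function `critical_path` is a hypothetical helper.

```python
from collections import defaultdict, deque

def critical_path(tasks):
    """tasks: {name: (duration_minutes, [predecessor names])}.
    Returns (total_minutes, ordered list of critical-path task names)."""
    successors = defaultdict(list)
    pending = {name: len(preds) for name, (_, preds) in tasks.items()}
    for name, (_, preds) in tasks.items():
        for p in preds:
            successors[p].append(name)

    # Forward pass in topological order: earliest finish of each task.
    ready = deque(n for n, count in pending.items() if count == 0)
    finish, driver = {}, {}
    while ready:
        name = ready.popleft()
        duration, preds = tasks[name]
        # The "driver" is the predecessor that finishes last and so sets the start.
        driver[name] = max(preds, key=lambda p: finish[p], default=None)
        start = finish[driver[name]] if preds else 0
        finish[name] = start + duration
        for s in successors[name]:
            pending[s] -= 1
            if pending[s] == 0:
                ready.append(s)
    if len(finish) != len(tasks):
        raise ValueError("dependency cycle or unknown predecessor")

    # Walk back from the last-finishing task along the driving predecessors.
    end = max(finish, key=finish.get)
    total, path, node = finish[end], [], end
    while node is not None:
        path.append(node)
        node = driver[node]
    return total, path[::-1]

# Hypothetical four-task plan (durations in minutes).
plan = {
    "stop services": (10, []),
    "migrate data": (90, ["stop services"]),
    "deploy services": (40, ["stop services"]),
    "smoke tests": (20, ["migrate data", "deploy services"]),
}
total, path = critical_path(plan)
fits_window = total <= 180  # assumed 3-hour deployment window
```

In this toy plan the data migration drives the end time, so shaving minutes off "deploy services" would not shorten the deployment at all, which is exactly why visibility of the critical path matters.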

Step 2: Create a view for the Team that abstracts away the planning complexity (enter: Trello)

In manufacturing operations, shop-floor workers are interested in three things:

- What to make

- When to make it

- How to make it

In other words, the people on the floor don’t need to be bothered with full supply chain visibility or plant flow constraints (that’s why you buy finite scheduling software). Taking this philosophy into the challenge at hand: I had the answers to the three questions above, but I needed to present them to the team in a way that was easy to access, showed the what, the when and the how, and provided real-time transparency of where we were in the sequence (we had teams in separate geographic locations).

I’d been using Trello for some teams that we were coaching and was impressed with its slick online Kanban features.

Porting from MS-Project to Trello

There was an initial challenge of porting from MS-Project to Trello, but that turned out to be a breeze using Excel as a bridging mechanism. I would typically copy the “Task Name”, “Duration”, “Start” and “Finish” columns from MS-Project (they are all conveniently next to each other on the default Gantt view)

…and paste all tasks into a spreadsheet. Several things happen in the spreadsheet:

- Delete parent tasks

- Concatenate the start time, description and task duration to come up with a better Trello task description (simple Excel formula). I also deleted leading spaces created by MS-Project’s indents.

- Reorder the task sequence so that it was based on the start time (oldest to newest)

An excerpt is shown below. The “Trello name” is the generated name in the far right hand column.

Once the previous steps were done (they only take a few minutes), the entire “Trello name” column can be copied and pasted into Trello (see screen grab below).

Trello allows you to import up to a hundred tasks at a time. As we had over a hundred I had to do it twice. The entire process going from MS-Project to Trello took about 10 minutes in total with checking!
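
The spreadsheet steps above could equally be scripted. The following is only a sketch of that idea, not what was actually used (that was a simple Excel formula). It assumes that a parent task can be recognized because the next row is indented further, that start times arrive as `YYYY-MM-DD HH:MM` text, and it invents a name format of "start, task, (duration)"; the sample rows are hypothetical.

```python
from datetime import datetime

def indent(name):
    """Number of leading spaces left behind by MS-Project's outline indents."""
    return len(name) - len(name.lstrip(" "))

def trello_names(rows):
    """rows: list of (task_name, duration, start) tuples in Gantt order."""
    leaves = []
    for i, (name, duration, start) in enumerate(rows):
        # Assumption: a row is a parent if the row after it is indented deeper.
        is_parent = i + 1 < len(rows) and indent(rows[i + 1][0]) > indent(name)
        if not is_parent:
            when = datetime.strptime(start, "%Y-%m-%d %H:%M")
            leaves.append((when, name.strip(), duration))
    # Oldest start first; the sort is stable, so ties keep their Gantt order.
    leaves.sort(key=lambda leaf: leaf[0])
    return [f"{when:%H:%M} {name} ({duration})" for when, name, duration in leaves]

def batches(items, size=100):
    """Split the names into chunks small enough for one paste into Trello."""
    return [items[i:i + size] for i in range(0, len(items), size)]

# Hypothetical rows as they might be pasted from the Gantt view.
rows = [
    ("Go-live deployment", "3 hrs", "2020-01-01 18:00"),
    ("   Deploy services", "40 mins", "2020-01-01 18:10"),
    ("   Stop services", "10 mins", "2020-01-01 18:00"),
]
names = trello_names(rows)
```

Each batch returned by `batches(names)` can then be joined with newlines and pasted into Trello in one go.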

The one part I wasn’t able to automate in Trello was the color coordination of tasks (for better readability) and the addition of the resources to the tasks. Although it’s possible to write a parser and use the available Trello API, we didn’t have the time to go that route this time ’round 🙂
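
For anyone who does have the time, a rough sketch of what that route might look like follows. It only builds the request for Trello's card-creation endpoint and does not send it. The IDs, key and token are placeholders, `card_request` is a hypothetical helper, and the parameter names should be checked against the current Trello REST API documentation before relying on them.

```python
from urllib.parse import urlencode
from urllib.request import Request

def card_request(name, list_id, label_ids, member_ids, key, token):
    """Build (but do not send) a request that creates one Trello card."""
    params = urlencode({
        "key": key,
        "token": token,
        "idList": list_id,                  # the board column, e.g. 'Planned'
        "name": name,
        "idLabels": ",".join(label_ids),    # labels carry the color
        "idMembers": ",".join(member_ids),  # people assigned to the task
    })
    return Request(f"https://api.trello.com/1/cards?{params}", method="POST")

# Placeholder values throughout; none of these are real identifiers.
req = card_request(
    "18:00 Stop services (10 mins)",
    "LIST_ID", ["LABEL_ID"], ["MEMBER_ID"], "API_KEY", "API_TOKEN",
)
# Passing req to urllib.request.urlopen would actually create the card.
```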

Step 3: Empowering the Team with a ‘Live view’ of the go-live execution

The end result was a prepped deployment board that allowed everyone to see what they needed to do and when they were expected to do it, without needing to understand the intricacies of the planning process. They could also see which tasks were in motion and which had been completed (people moved their tasks as they would on a traditional Scrum board). This allowed people to be ready when it was their time to deliver on something. We aimed for near-zero communication loss between parties, and as Trello was a live view on the deployment, everyone was able to get themselves set up to act in advance of their ‘cue’!

The picture below shows the board in the latter stage of the execution (no tasks left in ‘Planned’). Unfortunately I didn’t have a screen capture from earlier in the day when the originally imported plan was intact.

Step 4: Weekly ‘dress rehearsals’ for practice and fine tuning the plan based on empirical performance data

At this point, I had a comprehensive execution model on the Gantt and an elegant way of presenting those tasks for consumption by the deployment team (using Trello). As the Production environment was new (no consumers yet), we could use it as a staging environment until the actual go-live. We decided to run through the full deployment execution as a dress rehearsal once a week. This would:

- Give everybody practice on the execution process

- Allow us to refactor the Gantt model based on empirical performance data (everyone captured the start and end times of their tasks during the dress rehearsals). This made the plan more accurate.

- Expose gaps in the Production environment as part of each dress rehearsal (the smoke tests had to go green each time and if they didn’t, the techies would troubleshoot)

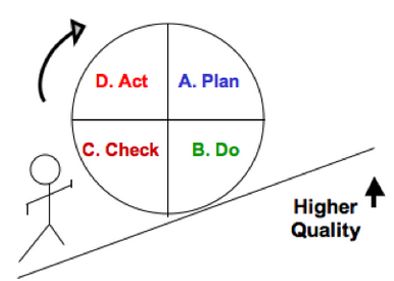

In effect we were running a “Plan Do Check Act” Deming Cycle:

- Plan the execution on the Gantt

- Present the plan for execution using Trello as an online Kanban board

- Gather the execution statistics and note Gaps found

- Refactor the task duration on the Gantt using the empirical performance data

…and repeat every week starting two months before Go-Live
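
As an illustration of the refactoring step in this cycle, here is a small sketch that replaces each planned duration with the average of what was observed in rehearsals. Averaging is an assumption made for the example (the latest or the worst observation would be equally defensible), and the task names and times are invented.

```python
from datetime import datetime
from statistics import mean

def refined_durations(planned, rehearsals, fmt="%H:%M"):
    """planned: {task: minutes}.
    rehearsals: one {task: (start, end)} dict per dress rehearsal, times as text.
    Returns planned durations updated with the mean observed duration."""
    observed = {}
    for run in rehearsals:
        for task, (start, end) in run.items():
            elapsed = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
            observed.setdefault(task, []).append(elapsed.total_seconds() / 60)
    # Tasks that were never timed keep their original estimate.
    return {
        task: round(mean(observed[task])) if task in observed else minutes
        for task, minutes in planned.items()
    }

# Hypothetical plan and two rehearsals' worth of captured times.
planned = {"migrate data": 90, "smoke tests": 20}
rehearsals = [
    {"migrate data": ("18:10", "19:20")},
    {"migrate data": ("18:10", "19:30")},
]
updated = refined_durations(planned, rehearsals)
```

The updated durations would then be fed back into the Gantt so that the critical path reflects measured rather than estimated times.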

Execution Tip: Keeping everyone informed

We created a Trello task called “Deployment Commentary” which I used to keep all stakeholders informed as to where we were with the execution. As the coordinator of the deployment I was fully aware of the actual state (because I was monitoring the Trello board and orchestrating the actions), but in the background I was also reconciling the critical path tasks against their planned start and end times on the Gantt chart. This meant that at any point in time I knew whether we were running ahead of or behind the planned execution, and by how many minutes. As each critical path task was done, I checked the Gantt, worked out the delay and posted it on the commentary so that EVERYONE knew what was going on from a single source. Most people had configured alerting on that task so they would get the update pushed directly to their smartphones or e-mail. It also limited the number of people coming into the Command Center to ask “where we’re at?”.
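
The arithmetic behind each commentary update is simple enough to sketch. The wording of the message, the task name and the times below are illustrative assumptions, and `commentary` is a hypothetical helper rather than anything that was used on the day.

```python
from datetime import datetime

def commentary(task, planned_finish, actual_finish, fmt="%H:%M"):
    """Compare a critical-path task's actual finish with the Gantt's planned finish."""
    delta = datetime.strptime(actual_finish, fmt) - datetime.strptime(planned_finish, fmt)
    minutes = int(delta.total_seconds() // 60)
    if minutes == 0:
        status = "on schedule"
    elif minutes > 0:
        status = f"{minutes} min behind plan"
    else:
        status = f"{-minutes} min ahead of plan"
    return f"{actual_finish} {task} done: {status}"

# Hypothetical update for a task that finished 15 minutes early.
update = commentary("migrate data", "19:40", "19:25")
```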

So how did it all pan out?

As the famous (South African) golfer Gary Player is credited with saying: “The more I practice, the luckier I get”. The crew completed the solution deployment and data migration an hour ahead of time and handed over to the testing team for deployment confirmation. The system went live smoothly, was fully operational the next day, and all was well with the world 🙂